The Jaybridge Challenge 2013 is now over, and from a technical perspective it went flawlessly. I am pretty thrilled with the performance of the site. The challenge itself was interesting: a well-designed problem that allowed a wide range of solutions. The only downside was the small number of contestants. I would estimate that about 1 in 50 people who learned about the competition looked at the site, and about 1 in 50 of those signed up. Of those, about half submitted a solution. So for next time, plan on spending more effort on publicity, and perhaps time the competition to coincide with school holidays of some sort.

Adding Fields to the live database:

During the competition I discovered a bug in my calculation of the leaderboard rank for tied scores (ties occurred because the trivial solution generates a repeatable score, and pretty much everyone logs that as their first score). Instead of using the submission time to pick the leader in case of a tie, I was using the user’s signup time. During beta testing, users signed up and then got a trivial solution in a short time, so the sorting appeared to work fine. When I noticed the bug during the competition, I realized I should have been tracking the submission time along with the submission id and score in the best score database. Making this change on the live site required adding some fields to the database, which was a bit scary, since I hadn’t tried something like this before. (In retrospect, I would say that it’s pretty similar to lots of backwards compatibility things I’ve done in the embedded world. I like when that stuff translates.)
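To make the tie-break concrete, here is a minimal Python sketch. The `BestScore` record and its field names are made up for illustration (the site's actual entity isn't shown in this post), and it assumes a higher score is better.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class BestScore:
    # Hypothetical record; field names are illustrative, not the site's schema.
    user: str
    score: float
    signup_time: datetime
    submission_time: datetime


def rank(entries):
    # Assumes a higher score is better; ties go to the earlier submission.
    return sorted(entries, key=lambda e: (-e.score, e.submission_time))


entries = [
    # alice signed up first but submitted later than bob.
    BestScore("alice", 10.0, datetime(2013, 1, 1), datetime(2013, 3, 5)),
    BestScore("bob", 10.0, datetime(2013, 2, 1), datetime(2013, 3, 2)),
]
leaderboard = [e.user for e in rank(entries)]
```

With the tie broken on `signup_time` instead, alice would be listed first even though bob reached the score earlier, which is the kind of mis-ordering described above.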

Code Steps:

- Add new field to the entity constructor

- Write a function to “massage the db”, adding values for the new field to entries that don’t have it, and create a handler at some URL to trigger this massage.

- Update the leaderboard code to use the new database field.
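As a rough sketch of what the “massage” step can look like, here is a backfill written against plain Python dicts standing in for the database. The function name, the dict layout, and the `submission_time` key are assumptions for illustration, not the site's real code or the datastore's API.

```python
def massage_db(best_scores, submissions):
    """Backfill 'submission_time' on best-score entries that lack it.

    best_scores: dict of user -> entry dict (hypothetical stand-in for the db)
    submissions: dict of submission id -> {'time': ...}
    Returns the number of entries updated; safe to run more than once.
    """
    updated = 0
    for entry in best_scores.values():
        if entry.get("submission_time") is not None:
            continue  # already migrated
        sub = submissions.get(entry.get("submission_id"))
        if sub is None:
            continue  # unknown submission id: skip rather than crash
        entry["submission_time"] = sub["time"]
        updated += 1
    return updated


best_scores = {
    "alice": {"submission_id": 1, "score": 0},
    "bob": {"submission_id": 99, "score": 5},  # id 99 does not exist
}
submissions = {1: {"time": 1000}}
first_run = massage_db(best_scores, submissions)
second_run = massage_db(best_scores, submissions)
```

Because entries that already have the field are skipped, hitting the trigger URL twice does no harm, which is handy when the change has to be rolled out in stages.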

Test Deployment Steps:

I tested the changes on my development server as I went, and all seemed to be going well. I then deployed the change on our beta-test site and discovered several problems (the first 2 were related to the initial default values and hadn’t shown up in tests on the development server):

- Corner case of best_score == 0 wasn’t handled correctly (because in my test submissions I hadn’t realized this was a valid score).

- Invalid submission IDs weren’t checked for.

- The leaderboard queries stopped working for a time while the new database indexes were building, making the site nonfunctional for a few minutes, which I deemed unacceptable.
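I won't reproduce the exact zero-score bug here, but one common way this kind of corner case creeps into Python code is a truthiness check that can't tell 0 from “unset”. The sketch below is an assumed illustration of that pitfall, with hypothetical helper names, not the actual code from the site.

```python
def has_score_buggy(entry):
    # Truthiness check: treats a legitimate score of 0 as "no score".
    return bool(entry.get("best_score"))


def has_score(entry):
    # Explicit None check: 0 is a valid score, only None means "unset".
    return entry.get("best_score") is not None


zero = {"best_score": 0}
unset = {"best_score": None}
```

Here `has_score_buggy(zero)` is `False` while `has_score(zero)` is `True`; both agree that `unset` has no score.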

Live Competition Site Deployment:

Based on the test site deployment, I fixed the 2 bugs and then broke the deployment down into a few steps as follows.

- Deploy to the live site the changes to the db constructor and the massage functionality only.

- Hit the “massage db” URL so that the new field is populated throughout the db, then wait a bit for the indexes to be built.

- Deploy the change to the leaderboard to use the new database information.

And voilà! Flawless deployment. I was pretty grateful to have the extra layer of testing provided by our beta-test site. It was definitely kinda scary to risk a change like this while the competition was live, but it went very well, and I learned enough about making db changes like this to get the job done.

A note on cost:

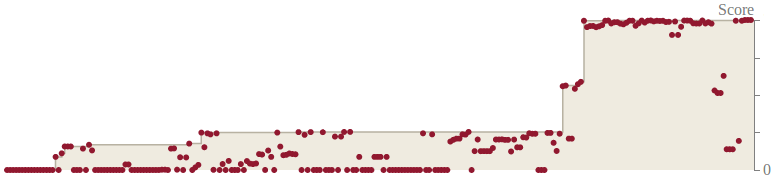

Regarding the operating cost/optimization/developer time trade-offs, it was interesting to see that the challenge didn’t get enough interest to run up any significant costs. We did accrue ~10 cents of database queries beyond the free quota. I definitely hadn’t anticipated the number of submissions that some contestants would make. Some appeared not to be doing any basic validation of their submissions locally (I suppose some didn’t have Ubuntu VMs to test on and didn’t think to set them up), which for one Java user meant ~90 submissions that failed to execute in some way before getting a score. One team used some randomness in their algorithm and so would submit the same entry multiple times for different scores, with nearly 250 submissions in total. I wasn’t caching the table of each user’s submissions. Even if I had been, I probably wouldn’t have thought initially to incrementally update the cached value and minimize the big queries, since it hadn’t occurred to me that any single user would make so many submissions; certainly none of our beta testers did.
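For the curious, the incremental cache update mentioned above could look roughly like this. It is only a sketch of the idea: the class, the `load_all` callback, and the in-memory dict are assumptions for illustration, and nothing like it was deployed on the site.

```python
class SubmissionHistoryCache:
    """Per-user cache of submission rows, updated incrementally."""

    def __init__(self, load_all):
        # load_all(user) is a hypothetical function that runs the big query.
        self._load_all = load_all
        self._rows = {}

    def get(self, user):
        if user not in self._rows:
            # Cache miss: pay for the full query once.
            self._rows[user] = list(self._load_all(user))
        return self._rows[user]

    def add(self, user, row):
        # Append to an already cached table; if the user isn't cached yet,
        # the next get() will load everything, including this row.
        if user in self._rows:
            self._rows[user].append(row)


store = {"team": [("sub1", 10)]}  # stand-in for the database
queries = []                      # records each full query, for illustration


def load_all(user):
    queries.append(user)
    return store.get(user, [])


cache = SubmissionHistoryCache(load_all)
cache.get("team")
store["team"].append(("sub2", 12))  # the write to the backing store
cache.add("team", ("sub2", 12))     # keep the cache in step, no re-query
history = cache.get("team")
```

In this run the full query happens once, no matter how many submissions the user adds afterwards.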

Here are screenshots of the submission history for the users mentioned above (scores anonymized):

In the end, not spending time optimizing was of course the right thing, since none of it was needed. Also, I would have guessed wrong about where the high use was going to be.