We are beta testing the programming competition site I built for an upcoming Jaybridge Robotics recruiting event. Yesterday the site went down and nearly every page request returned a 503 server error. Looking through the log history, even simple GET requests such as favicon.ico were timing out:

Info: 2013-01-15 10:21:06.818

This request caused a new process to be started for your application, and thus caused your application code to be loaded for the first time. This request may thus take longer and use more CPU than a typical request for your application.

Investigating this warning revealed what many developers of low-traffic sites consider a flaw that makes GAE unusable. The flip side of GAE automatically scaling your site up when traffic increases is that it also shuts your site down when there isn’t any traffic, so a request may have to wait for your entire process to start before it is serviced. Since there is a 30-second timeout on requests, if your app’s startup is slow (especially a problem with Java, though it shouldn’t apply so much to this Python app), users will see greater latency, or errors if the request can’t be serviced in time.
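One common way to keep a cold start well under the request deadline is to defer expensive setup until a handler actually needs it. The sketch below is only an illustration of that idea, not the competition site's code: `load_expensive_resource`, `state`, and the two handlers are hypothetical names invented for the example.

```python
# Shared module state: the resource is built on first use, not at import time.
state = {"resource": None, "loads": 0}


def load_expensive_resource():
    """Hypothetical stand-in for slow startup work (parsing data, building caches)."""
    state["loads"] += 1
    return {"problems": ["warmup", "maze", "sorting"]}


def get_resource():
    """Return the shared resource, building it only the first time it is needed."""
    if state["resource"] is None:
        state["resource"] = load_expensive_resource()
    return state["resource"]


def handle_favicon():
    """A trivial request never touches the expensive resource."""
    return "200 OK"


def handle_problem_list():
    """Only handlers that need the resource pay the setup cost."""
    return get_resource()["problems"]
```

With this layout, a request for something like favicon.ico on a freshly started process returns without waiting for the slow setup, and the cost is paid once by the first request that needs the data.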

This “feature” is core to the GAE service, but Google now offers paid subscribers ways to minimize the risk. You can pay for a minimum number of idle instances, which ensures your app is always ready to serve new requests. There is also an “Always On” feature which should help. I will update this when I switch to the paid service and learn more about it.
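Idle instances pair naturally with App Engine's warmup requests: when `warmup` is listed under `inbound_services` in `app.yaml`, App Engine may send a request to `/_ah/warmup` on a new instance before routing user traffic to it (this is not guaranteed for every instance). Here is a minimal sketch of handling that path in a plain WSGI app. The `cache` dictionary and `prime_caches` function are hypothetical placeholders, and this is not how my site is actually wired up.

```python
# Hypothetical cache that the warmup request fills ahead of real traffic.
cache = {"ready": False}


def prime_caches():
    """Placeholder for whatever slow setup the app needs before serving users."""
    cache["ready"] = True


def application(environ, start_response):
    """Minimal WSGI app with a warmup route."""
    path = environ.get("PATH_INFO", "/")
    if path == "/_ah/warmup":
        # App Engine requests this path on a new instance when warmup is enabled.
        prime_caches()
        body = b"warmed"
    else:
        body = b"hello"
    headers = [("Content-Type", "text/plain"),
               ("Content-Length", str(len(body)))]
    start_response("200 OK", headers)
    return [body]
```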

Another solution, not recommended by Google, is to keep your site warm with regular queries of some sort. This wastes bandwidth, and Google would understandably rather turn your app off and spin it back up on demand to save resources.
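For illustration only, a keep-warm pinger could look something like the sketch below. It uses just the Python standard library. The URL, the interval, and the function names `ping` and `keep_warm` are assumptions made up for the example, and again this is the approach Google discourages.

```python
import time
from urllib.error import HTTPError
from urllib.request import urlopen


def ping(url, fetch=urlopen, timeout=10):
    """Request url once and return (status, seconds); status is None if unreachable."""
    start = time.monotonic()
    try:
        response = fetch(url, timeout=timeout)
        try:
            status = response.getcode()
        finally:
            response.close()
    except HTTPError as err:
        status = err.code  # e.g. 503 while the app is failing
    except OSError:
        status = None  # DNS failure, refused connection, timeout, ...
    return status, time.monotonic() - start


def keep_warm(url, interval_seconds=300, rounds=3, fetch=urlopen, sleep=time.sleep):
    """Ping url `rounds` times, sleeping between pings, and return the results."""
    results = []
    for i in range(rounds):
        results.append(ping(url, fetch=fetch))
        if i < rounds - 1:
            sleep(interval_seconds)
    return results
```

The `fetch` and `sleep` parameters exist only so the loop can be exercised without real network calls or real waiting. Logging the elapsed time from each ping also gives a rough picture of how long a cold start takes.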

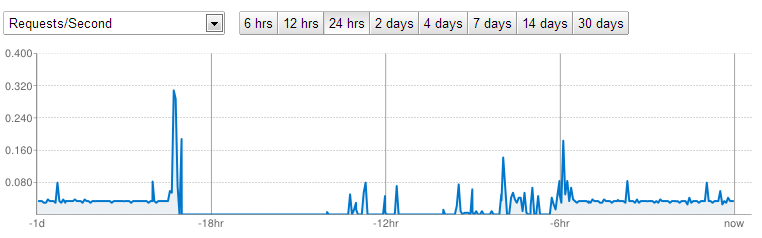

So yesterday my site when down, but I hadn’t made any changes and was not sure why. I am still not quite satisfied (but it is working well again now). Looking at the traffic to the site I saw it dropped to nothing during the time the site was down, -20hrs to -6hrs in the graph below:

Some requests also logged this more disturbing warning:

Warn: 2013-01-15 10:21:06.818

A problem was encountered with the process that handled this request, causing it to exit. This is likely to cause a new process to be used for the next request to your application. (Error code 121)

In the end it looks like it was an App Engine problem and not something on my end: lots of other people hit the same Error code 121 during the same window, and their apps had the same issues.
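If you want to see how often a warning like this shows up in your own logs, a small tally along these lines may help. It is a sketch that assumes the logs have been exported as plain text with the `(Error code N)` wording shown above; the function name `tally_error_codes` is made up for the example.

```python
import re
from collections import Counter

# Matches the "(Error code 121)" suffix that App Engine appends to the warning.
ERROR_CODE = re.compile(r"\(Error code (\d+)\)")


def tally_error_codes(log_text):
    """Count how many times each error code appears in exported log text."""
    return Counter(ERROR_CODE.findall(log_text))


sample = """Warn: 2013-01-15 10:21:06.818
A problem was encountered with the process that handled this request,
causing it to exit. This is likely to cause a new process to be used
for the next request to your application. (Error code 121)
"""

for code, count in tally_error_codes(sample).items():
    print("Error code %s: %d request(s)" % (code, count))
```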

The problem was apparently temporary and I haven’t seen it since. As for spinning up new instances of the app, our grading servers periodically check for new submissions by design, so this wasn’t an issue for us. We also upgraded to the paid version and requested 1 idle instance, which should further mitigate the issue.