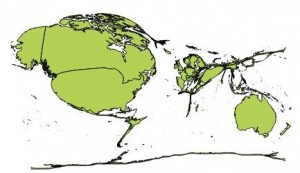

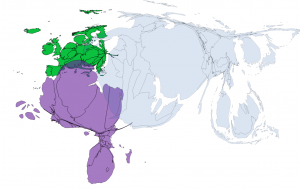

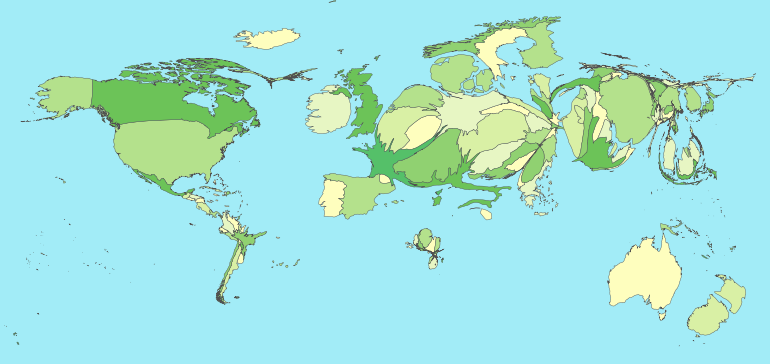

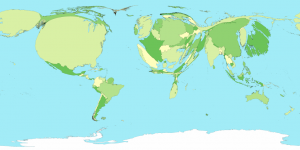

As part of a cartogram generating project I need to get data from a .xls file into a shapefile for use with ScapeToad. Reading the excel file is easy with xlrd. Shapefiles? that is new territory.

Shapefile resources:

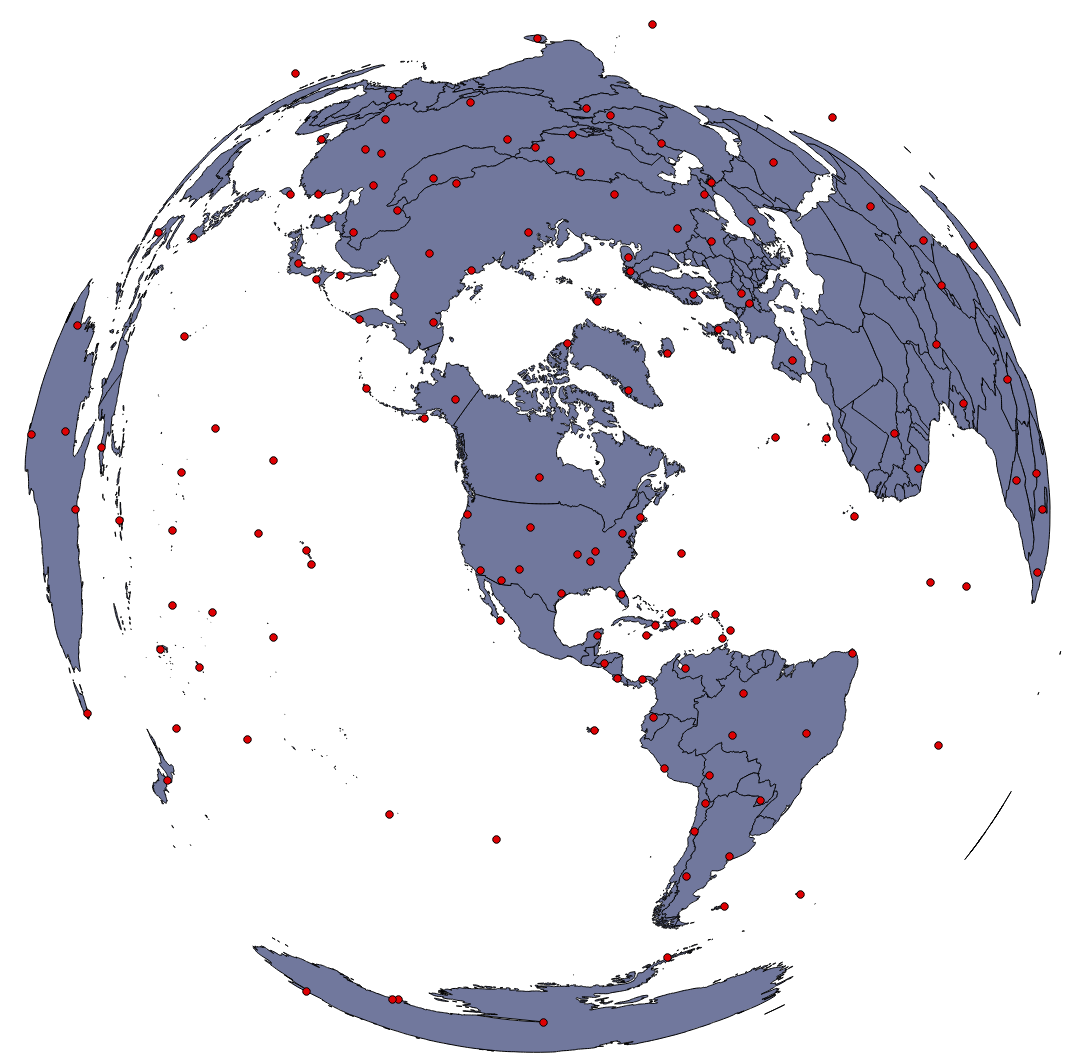

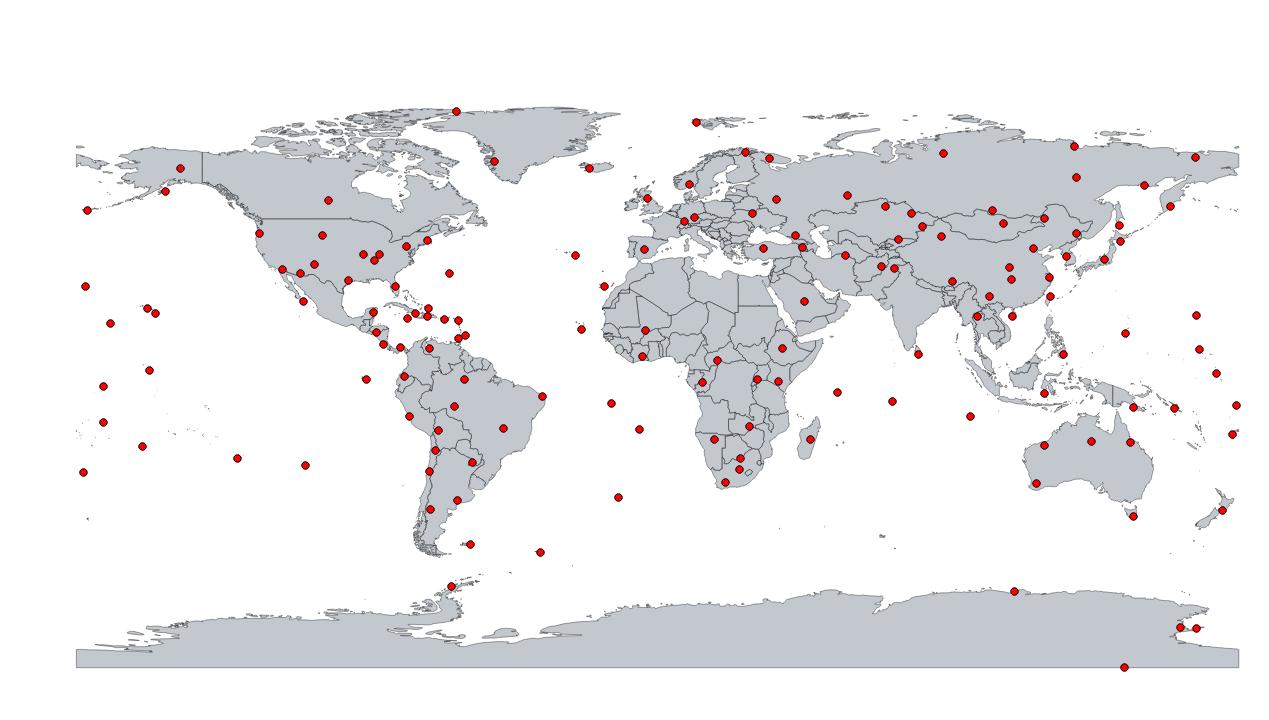

ScapeToad requires a high quality shapefile as input. I tested two that worked fine, both include population data suitable for testing:

- Thematic Mapping: http://thematicmapping.org/downloads/world_borders.php TM_WORLD_BORDERS_SIMPL-0.3.zip. Which is licensed under the Create Commons Attribution-Share Alike License. This license is not appropriate for commercial use and the author didn’t respond to my question regarding other licensing options.

- Natural Earth: On a tip from Andy Woodruff, I switched to using Natural Earth shapefiles which are licensed completely in the public domain, suitable for anything you want! Note, I discovered that the most zoomed out Natural Earth file, “ne_110m”, didn’t have shapes for some of the smaller island countries in my data set, so switched to using the “ne_50m” versions which included everything I needed.

Next step, getting custom data into the shapefile.

Using pyshp to add data to a shapefile

Since I do most of my data processing in python anymore, I was happy to find a python module for read/write/editing shapefiles. Unfortunately, pyshp.py is not the best maintained module. I used pyshp version 1.1.4, because it was packaged in ubuntu. After discovering a number of bugs I realized they have already been reported but nothing significant seems to have been fixed in 1.1.6. So I will just document the workarounds I used here.

1st pyshp 1.1.4 workaround: Renamed the shapefiles to remove any dots in the file name (the 0.3 in the case of thematic mapping shapefiles) because pyshp can’t handle extra dots in the file name.

This is kinda a nuisance since there are 4 files in a “shapefile”. This command will rename the extensions “dbf”, “prj”, “shp” and “shx” all at once:

for a in dbf prj shp shx;do mv TM_WORLD_BORDERS-0.3.$a TM_WORLD_BORDERS_dot_free.$a;done

2nd pyshp 1.1.4 workaround: Massage numeric data you are adding to a record to have the correct precision.

My whole reason for using pyshp is to add data from excel into the shapefile. This means adding fields to identify the data and then adding the data to the record for each shape. The format of the new attributes (a.k.a. fields) is well described here. In my case I want to add numbers for example: sh.field(‘MY_DATA’, ‘N’, 6, 3). The number args are width and precision, where width is the total number of characters to represent the number and precision is the number of characters after the decimal. The above (6,3) can encode: -1.234 and 98.765.

Note, pyshp will error (AssertionError assert len(value) == size) if you put data into the record with greater precision than specified (it will not truncate for you). I used a simple hack below to get a precision of 3 for my data:

def precision3(n):

''' Force argument to precision of 3'''

return float('%0.3f'%n)

3rd pyshp 1.1.4 workaround: When adding a new data field, pad all the records with default data for the new attribute.

pyshp assumes when saving the file that the data is perfectly formatted, but doesn’t help too much when adding or deleting data. Records are stored in python as a list of lists, when the shapefile is written pyshp assumes that the records lengths equal the number of fields (as they should be). But it is your job to make sure this is true (if not the records will wrap around and become non-sense). Q-GIS is useful for inspecting shapefile attribute tables to discover issues and verify that your new shapefile works in an independent reader.

In my case data wasn’t available for all countries, so I padded with a default value (appended to the end of all records when adding the field) and then looped through and put the correct data in the records for which data was available.

Example here adding a new field for Numeric data and default data to all records. All my data is non-negative, so magic number “1” is for the decimal point.

def addField(name, widthMinusPrecision, precision = 3, default = 0):

sf.field(name, 'N', widthMinusPrecision+precision+1, precision)

# add default data all the way down to keep the shape file "in shape"

for r in sf.records:

r.append(default)

return

4th pyshp 1.1.4 workaround: delete() method doesn’t work, don’t use it.

Each shape is described by two pieces of data, linked together based on their index. When deleting a shape, both the record (with the meta data) and the shape (with coordinates etc) must be removed. If only one is deleted pyshp will add a dummy entry at the end and many of your records and shapes won’t line up anymore. To delete a shape, you must delete both the shape and the corresponding record. The delete method doesn’t do this, don’t use it, do it yourself:

def deleteShape(n):

del sf._shapes[n]

del sf.records[n]

return

5th pyshp 1.1.4 workaround: Handle non-ascii character encodings yourself

pyshp doesn’t declare a character encoding when reading files, so they default to “ascii”. If you are using the Natural Earth shapefiles they have non-ascii characters and are encoded in Windows-1252. (See previous post for more info about the Natural Earth encoding.) I worked around this by looping over the records and encoding all strings to unicode:

for r in sf.records:

for i,f in enumerate(r):

if isinstance(f, str):

r[i] = unicode(f,'cp1252')

And then reversed this before saving the file via:

for r in sf.records:

for i,f in enumerate(r):

if isinstance(f, unicode):

r[i] = f.encode('cp1252')

6th pyshp 1.1.4 workaround: When looking at sf.fields adjust the index by one to ignore ‘DeletionFlag’

pyshp adds an imaginary field for internal state tracking to the beginning of the fields list. If you are looking up field names in this list to find indexes, you should correct your indexes accordingly, there is not actually a field called ‘DeletionFlag’.

Conclusion:

After working around these bugs and massaging my country names to map from .xls to the names in the shapefile (17 cases of “Bolivia (Plurinational State Of)” == “Bolivia”) , I was able to use pyshp to generate a new shapefile with my data in it! Next up, cartogram-orama.